In today’s data-driven world, organizations are continuously inundated with vast amounts of data. To gain meaningful insights and make informed decisions, they need efficient and scalable data pipelines.

A data pipeline is a series of processes that extract, transform, and load (ETL) data from various sources into a centralized system for analysis and decision-making.

In this blog post, we will explore the fundamental concepts of data pipeline development and demonstrate how to build robust pipelines with Python, Apache Spark, and Apache Airflow.

Importance of Data Pipelines

Before getting into the technical aspects, let us understand the importance of data pipelines in modern data-driven enterprises. A well-designed data pipeline offers several benefits, including:

- Data Integration: Data pipelines allow businesses to integrate data from various sources such as databases, APIs, logs, and cloud services, creating a unified data repository.

- Real-time Processing: Real-time data pipelines let organizations process and analyze data as it arrives, which supports faster insights and timely decision-making.

- Scalability: Scalable data pipelines can handle increasing data volumes without performance degradation, accommodating the ever-growing data requirements.

- Data Quality and Consistency: Data pipelines facilitate data cleaning and transformation, ensuring high data quality and consistency across the organization.

- Time and Cost Efficiency: Automated data pipelines reduce the manual effort required for data processing, leading to cost and time savings.

Building the Foundation: Data Pipeline Components

A data pipeline comprises several essential components, each with a specific role in processing and analyzing data:

- Data Sources: Identify and access the various data sources from which you will be extracting data. This can include databases, logs, cloud storage, APIs, and more.

- Data Extraction: Extract data from the identified sources. This step involves fetching data and loading it into the pipeline for further processing.

- Data Transformation: Clean, enrich, and transform the data into a format suitable for analysis. This phase is crucial for maintaining data consistency and integrity.

- Data Loading: Load the processed data into a target data store or warehouse, where it can be accessed for analysis and reporting.
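The four components above can be sketched with nothing but the Python standard library. This is a minimal illustration, not a production design: the inline CSV sample, the `sales` table, and the function names are all hypothetical, and an in-memory SQLite database stands in for a real data warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical sample input standing in for a real data source.
SAMPLE_CSV = """order_id,quantity,unit_price
1,2,9.50
2,1,20.00
3,,5.00
"""

def extract(text):
    """Extraction: parse raw CSV text into a list of row dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transformation: drop rows with a missing quantity and derive revenue."""
    cleaned = []
    for row in rows:
        if not row["quantity"]:
            continue  # skip incomplete records
        quantity = int(row["quantity"])
        unit_price = float(row["unit_price"])
        cleaned.append((int(row["order_id"]), quantity, unit_price, quantity * unit_price))
    return cleaned

def load(records, connection):
    """Loading: write the cleaned records to a target table."""
    connection.execute(
        "CREATE TABLE IF NOT EXISTS sales "
        "(order_id INTEGER, quantity INTEGER, unit_price REAL, revenue REAL)"
    )
    connection.executemany("INSERT INTO sales VALUES (?, ?, ?, ?)", records)
    connection.commit()

def run_pipeline(text, connection):
    """Chain the three stages in order."""
    load(transform(extract(text)), connection)

# In-memory SQLite stands in for the target data store.
connection = sqlite3.connect(":memory:")
run_pipeline(SAMPLE_CSV, connection)
row_count, total_revenue = connection.execute(
    "SELECT COUNT(*), SUM(revenue) FROM sales"
).fetchone()
print(row_count, total_revenue)
```

Keeping each stage a separate function means a stage can be tested or swapped on its own, for example replacing `load` with a writer for a different database.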

Implementing Data Pipelines with Python

Python has emerged as a popular language for building data pipelines due to its simplicity, extensive libraries, and community support. Let’s explore a simple example of a data pipeline for processing and analyzing e-commerce sales data.

Python

# Import required libraries
import pandas as pd
from sqlalchemy import create_engine

# Data Source: CSV file containing e-commerce sales data
data_source = "ecommerce_sales.csv"

# Data Extraction
def extract_data(source):
    return pd.read_csv(source)

# Data Transformation
def transform_data(data):
    # Parse the date column and derive the revenue of each row
    data["date"] = pd.to_datetime(data["date"])
    data["revenue"] = data["quantity"] * data["unit_price"]
    return data

# Data Loading
def load_data(data):
    # Assuming a database as the target data store; replace the placeholder
    # with a real SQLAlchemy URL (for example "sqlite:///sales.db")
    connection_string = "your_database_connection_string"
    engine = create_engine(connection_string)
    data.to_sql(name="ecommerce_sales", con=engine, if_exists="replace", index=False)

if __name__ == "__main__":
    raw_data = extract_data(data_source)
    processed_data = transform_data(raw_data)
    load_data(processed_data)

In this example, we use pandas, a powerful Python library for data manipulation, to extract, transform, and load the e-commerce sales data. The extract_data() function reads the data from a CSV file, transform_data() parses the date column and derives a revenue column from quantity and unit price, and load_data() writes the processed data to a database table.
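One way to try the transform and load steps without a CSV file or a database server is to feed them a small in-memory DataFrame and write to an in-memory SQLite database. The sketch below assumes pandas is installed. The sample rows are made up for illustration, and this variant of transform_data() copies its input so the sample stays unchanged. pandas accepts a plain sqlite3 connection for `con`, so no connection string is needed here.

```python
import sqlite3

import pandas as pd

def transform_data(data):
    # Work on a copy so the caller's DataFrame is left untouched
    data = data.copy()
    data["date"] = pd.to_datetime(data["date"])
    data["revenue"] = data["quantity"] * data["unit_price"]
    return data

# Hypothetical sample rows with the columns the pipeline expects
sample = pd.DataFrame(
    {
        "date": ["2023-07-01", "2023-07-02"],
        "quantity": [2, 3],
        "unit_price": [10.0, 4.0],
    }
)

processed = transform_data(sample)

# In-memory SQLite database standing in for the real target store
connection = sqlite3.connect(":memory:")
processed.to_sql(name="ecommerce_sales", con=connection, if_exists="replace", index=False)

row_count, total_revenue = connection.execute(
    "SELECT COUNT(*), SUM(revenue) FROM ecommerce_sales"
).fetchone()
print(row_count, total_revenue)
```

A check like this is a cheap way to confirm that the derived columns look right before pointing the pipeline at production data.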

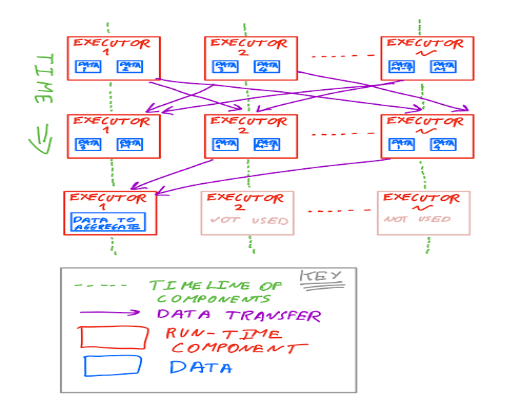

Enhancing Scalability with Apache Spark

While pandas running on a single machine is suitable for small to medium-sized datasets, large-scale data processing demands a more robust solution. Apache Spark, an open-source distributed computing system, is well suited to processing big data. Let’s extend our previous example using PySpark, the Python API for Apache Spark.

Python

# Import required libraries
from pyspark.sql import SparkSession

# Data Source: CSV file containing e-commerce sales data
data_source = "ecommerce_sales.csv"

# Create a SparkSession
spark = SparkSession.builder.appName("EcommercePipeline").getOrCreate()

# Data Extraction
def extract_data(source):
    return spark.read.csv(source, header=True, inferSchema=True)

# Data Transformation
def transform_data(data):
    # Perform necessary data cleaning and transformation
    data = data.withColumn("date", data["date"].cast("date"))
    data = data.withColumn("revenue", data["quantity"] * data["unit_price"])
    return data

# Data Loading
def load_data(data):
    # Assuming a database as the target data store (placeholder JDBC URL)
    connection_string = "your_database_connection_string"
    (
        data.write.mode("overwrite")
        .format("jdbc")
        .option("url", connection_string)
        .option("dbtable", "ecommerce_sales")
        .save()
    )

if __name__ == "__main__":
    raw_data = extract_data(data_source)
    processed_data = transform_data(raw_data)
    load_data(processed_data)

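In this enhanced version, we use PySpark, which distributes the work across the executors of a Spark cluster, so the pipeline can handle datasets that are too large for a single machine. Note that the JDBC write requires the target database’s JDBC driver to be available to Spark.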

Managing Dependencies with Apache Airflow

As data pipelines grow in complexity and size, managing dependencies between different pipeline tasks becomes critical. Apache Airflow is an open-source platform designed for orchestrating complex workflows. Let’s integrate our data pipeline with Apache Airflow for better management and scheduling.

Python

# Import required libraries
from datetime import datetime, timedelta

import pandas as pd
from airflow import DAG
from airflow.operators.python import PythonOperator  # Airflow 2.x import path
from sqlalchemy import create_engine

# Data Source: CSV file containing e-commerce sales data
data_source = "ecommerce_sales.csv"

# Assumption: each task runs in its own process, so the tasks hand data over
# through staging files on a shared filesystem (hypothetical paths)
raw_path = "/tmp/ecommerce_sales_raw.csv"
processed_path = "/tmp/ecommerce_sales_processed.csv"

# Initialize the DAG
default_args = {
    "owner": "data_pipeline_owner",
    "depends_on_past": False,
    "start_date": datetime(2023, 7, 1),
    "email_on_failure": False,
    "email_on_retry": False,
    "retries": 1,
    "retry_delay": timedelta(minutes=5),
}

# schedule_interval is the Airflow 2.x argument name; newer releases use schedule
dag = DAG("ecommerce_data_pipeline", default_args=default_args, schedule_interval=timedelta(days=1))

# Data Extraction
def extract_data():
    pd.read_csv(data_source).to_csv(raw_path, index=False)

# Data Transformation
def transform_data():
    # Perform necessary data cleaning and transformation
    data = pd.read_csv(raw_path)
    data["date"] = pd.to_datetime(data["date"])
    data["revenue"] = data["quantity"] * data["unit_price"]
    data.to_csv(processed_path, index=False)

# Data Loading
def load_data():
    # Assuming a database as the target data store (placeholder SQLAlchemy URL)
    connection_string = "your_database_connection_string"
    data = pd.read_csv(processed_path, parse_dates=["date"])
    data.to_sql(name="ecommerce_sales", con=create_engine(connection_string), if_exists="replace", index=False)

# Define the tasks for the DAG
task_extract_data = PythonOperator(task_id="extract_data", python_callable=extract_data, dag=dag)
task_transform_data = PythonOperator(task_id="transform_data", python_callable=transform_data, dag=dag)
task_load_data = PythonOperator(task_id="load_data", python_callable=load_data, dag=dag)

# Define the task dependencies
task_extract_data >> task_transform_data >> task_load_data

In this example, we define an Apache Airflow DAG with three tasks representing data extraction, transformation, and loading. The dependencies declared with the >> operator ensure that each task runs only after the task before it has succeeded.
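To see how declared dependencies translate into an execution order, the idea can be sketched with Python’s standard-library graphlib module (Python 3.9+). This is only an illustration of topological ordering over a dependency graph, not a description of how Airflow’s scheduler is implemented; the task names simply mirror the DAG above.

```python
from graphlib import TopologicalSorter

# Map each task to the set of tasks that must finish before it can start
dependencies = {
    "extract_data": set(),
    "transform_data": {"extract_data"},
    "load_data": {"transform_data"},
}

# static_order() yields the tasks so that every task follows its prerequisites
execution_order = list(TopologicalSorter(dependencies).static_order())
print(execution_order)
```

For a linear chain like this one there is only one valid order; with branching dependencies, several orders can be valid, and an orchestrator is free to run independent tasks in parallel.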

Monitoring and Error Handling

Monitoring and handling errors in a data pipeline are crucial for ensuring its reliability and efficiency. Apache Airflow provides built-in mechanisms for monitoring tasks and managing errors. The retries and retry_delay settings in the DAG above, for example, tell Airflow to rerun a failed task once after a five-minute wait. Additionally, tools like Grafana and Prometheus can be integrated to provide real-time monitoring and alerting capabilities.
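The retry-and-log behavior an orchestrator provides can be approximated in plain Python to make the idea concrete. The sketch below is an assumption-laden illustration using only the standard library: run_with_retries() and flaky_extract() are hypothetical helpers, not part of Airflow, and the delay defaults to zero so the example runs instantly.

```python
import logging
import time

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("pipeline")

def run_with_retries(task, retries=1, retry_delay_seconds=0.0):
    """Run task(); on failure, log the error and retry up to `retries` times."""
    attempts = retries + 1
    for attempt in range(1, attempts + 1):
        try:
            result = task()
            logger.info("%s succeeded on attempt %d", task.__name__, attempt)
            return result
        except Exception:
            logger.exception(
                "%s failed on attempt %d of %d", task.__name__, attempt, attempts
            )
            if attempt == attempts:
                raise  # retries exhausted: surface the error to the caller
            time.sleep(retry_delay_seconds)

# Hypothetical flaky task: fails on the first call, succeeds on the second
calls = {"count": 0}

def flaky_extract():
    calls["count"] += 1
    if calls["count"] == 1:
        raise ConnectionError("source temporarily unavailable")
    return ["row-1", "row-2"]

rows = run_with_retries(flaky_extract, retries=1)
print(rows)
```

Logging every failed attempt, and re-raising once the retries are exhausted, keeps failures visible to whatever monitoring or alerting sits on top of the pipeline.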

Conclusion

Data pipelines play a pivotal role in modern data-driven enterprises, enabling efficient and scalable data processing and analysis. In this article, we explored the fundamental concepts of data pipeline development and demonstrated how to build robust pipelines using Python and Apache Spark. We also discussed the importance of managing dependencies and monitoring data pipelines with Apache Airflow, ensuring the reliability and performance of the entire data processing workflow.

By incorporating best practices in data pipeline development, organizations can harness the power of their data, gain valuable insights, and make data-driven decisions that drive success and growth.

Remember, building data pipelines is an iterative process. Continuously monitor and analyze your pipelines to identify areas for improvement, and stay up to date with the latest technologies and tools so that your pipelines remain efficient and scalable as data processing and analysis evolve.
