In machine learning, and in classification tasks in particular, imbalanced datasets are a common obstacle that can significantly impair model performance. Such datasets have a disproportionate class distribution, typically skewed heavily towards one class. The result is often a model that performs well on the majority class while failing to identify instances of the minority class. Given the critical implications in fields such as medical diagnosis and fraud detection, addressing dataset imbalance is essential. This post explores the nature of imbalanced datasets, their effect on model performance, and a range of techniques for mitigating them, with the aim of building more accurate and equitable machine learning models.

What are Imbalanced Datasets?

Definition and Implications

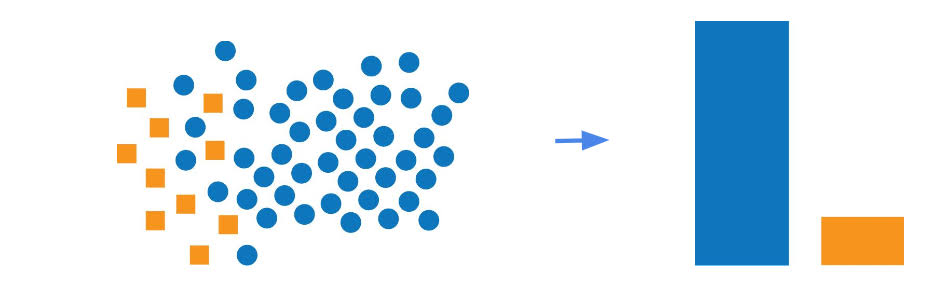

An imbalanced dataset, in the context of a classification problem, is one where the number of observations in one class significantly outweighs the number in one or more other classes. This poses a challenge because most learning algorithms minimize an average loss that treats every example equally, which in practice rewards overall accuracy. That accuracy can be misleadingly high when the model simply predicts the majority class for every input.

A Hypothetical Example

Consider a medical dataset for predicting a rare disease; the dataset might contain 95% negative cases (no disease) and only 5% positive cases (disease present). Similarly, in fraud detection, legitimate transactions vastly outnumber fraudulent ones. These scenarios underscore the prevalence and criticality of addressing imbalanced datasets across various domains.
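To make the problem concrete, here is a minimal sketch of the 95% / 5% disease scenario. The labels are made up for illustration, and the "model" is a hypothetical one that always predicts the majority class.

```python
# Hypothetical labels matching the 95% / 5% split described above.
y_true = [0] * 95 + [1] * 5          # 0 = no disease, 1 = disease present

# A "model" that ignores its input and always predicts the majority class.
y_pred = [0] * len(y_true)

correct = sum(t == p for t, p in zip(y_true, y_pred))
accuracy = correct / len(y_true)

# Recall on the positive class: the fraction of diseased patients that were caught.
true_positives = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
recall = true_positives / sum(t == 1 for t in y_true)

print(f"accuracy = {accuracy:.2f}")   # accuracy = 0.95
print(f"recall   = {recall:.2f}")     # recall   = 0.00
```

The model looks 95% accurate while missing every single positive case, which is why the rest of this post relies on other metrics.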

Evaluating Imbalance and Its Effects

Metrics for Imbalance

Accuracy alone is a misleading metric when dealing with imbalanced datasets. Precision, Recall, and the F1-Score offer a more nuanced view of model performance: precision measures how many of the selected items are relevant, and recall measures how many of the relevant items are selected. The F1-Score is the harmonic mean of precision and recall, giving a single number that is high only when both are high. The Confusion Matrix breaks the model’s predictions down by class, highlighting where the model is underperforming.
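For reference, these metrics can be written in terms of the confusion-matrix counts for the positive (minority) class. The symbols TP, FP, and FN (true positives, false positives, false negatives) are the usual shorthand and are introduced here, not taken from the text above.

```latex
\begin{align}
\text{Precision} &= \frac{TP}{TP + FP} \\
\text{Recall}    &= \frac{TP}{TP + FN} \\
F_1 &= \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}
     = \frac{2\,TP}{2\,TP + FP + FN}
\end{align}
```

True negatives appear in none of these formulas, so a large majority class cannot inflate them the way it inflates accuracy.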

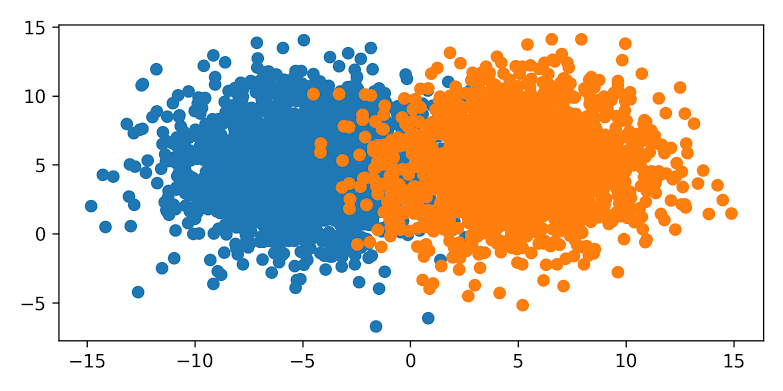

Visualizing Imbalance

Visualizing the class distribution can be an effective first step in understanding the extent of imbalance. Below is a Python code snippet using matplotlib and seaborn for visualizing the class distribution in a dataset:

```python
import matplotlib.pyplot as plt
import seaborn as sns

def plot_class_distribution(y):
    """Draw a bar chart of how many samples each class has."""
    plt.figure(figsize=(10, 6))
    sns.countplot(x=y).set_title("Class Distribution")
    plt.show()

# Assuming `y` is your target variable from the dataset
# plot_class_distribution(y)
```

Resampling Techniques

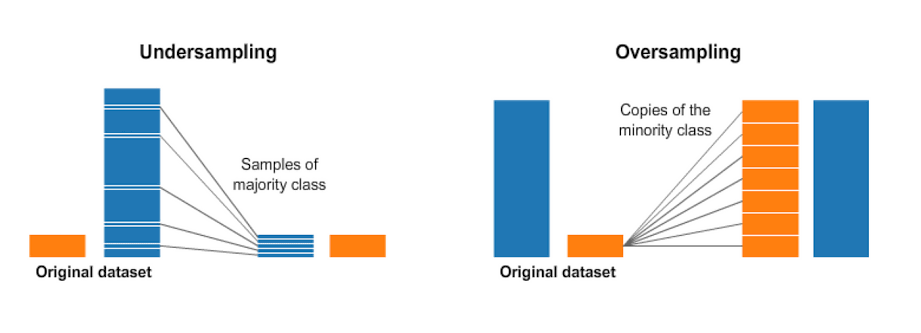

Oversampling the Minority Class

Oversampling increases the number of instances in the minority class to balance the dataset. SMOTE (Synthetic Minority Over-sampling Technique) goes a step further by creating synthetic examples rather than just duplicating existing ones. Here’s how you can apply SMOTE using the imbalanced-learn library (imported as imblearn):

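```python
from imblearn.over_sampling import SMOTE
from sklearn.model_selection import train_test_split

# X and y are assumed to be your feature matrix and target vector.
# Split first so the test set keeps the real class distribution.
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Resample the training split only; synthetic points should never reach the test set.
smote = SMOTE(random_state=42)
X_res, y_res = smote.fit_resample(X_train, y_train)
```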
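To show what "synthetic examples" means, below is a simplified NumPy sketch of the interpolation idea behind SMOTE. It is not the imblearn implementation: the function name `smote_like_sample`, its parameters, and the toy data are all assumptions made for illustration.

```python
import numpy as np

def smote_like_sample(X_minority, k=3, n_new=5, seed=0):
    """Create n_new synthetic points by interpolating between minority neighbours."""
    rng = np.random.default_rng(seed)
    n = len(X_minority)
    # Pairwise Euclidean distances within the minority class only.
    dists = np.linalg.norm(X_minority[:, None, :] - X_minority[None, :, :], axis=2)
    np.fill_diagonal(dists, np.inf)                # a point is not its own neighbour
    neighbours = np.argsort(dists, axis=1)[:, :k]  # indices of the k nearest neighbours

    synthetic = np.empty((n_new, X_minority.shape[1]))
    for i in range(n_new):
        base = rng.integers(n)                     # pick a minority point at random
        neighbour = rng.choice(neighbours[base])   # pick one of its nearest neighbours
        lam = rng.random()                         # interpolation factor in [0, 1)
        synthetic[i] = X_minority[base] + lam * (X_minority[neighbour] - X_minority[base])
    return synthetic

# Hypothetical minority-class samples with two features.
X_min = np.array([[1.0, 1.0], [1.5, 2.0], [2.0, 1.0], [2.5, 2.5]])
new_points = smote_like_sample(X_min)
print(new_points.shape)  # (5, 2)
```

Each new point lies on the line segment between an existing minority sample and one of its neighbours, so the synthetic data stays inside the region the minority class already occupies.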

Undersampling the Majority Class

Undersampling reduces the number of instances in the majority class. Because the discarded rows may carry useful information, it works best when the majority class is large enough that a subset still represents it well. Here is an example using random undersampling:

```python
from imblearn.under_sampling import RandomUnderSampler

# Randomly drop majority-class rows from the training split until the classes are balanced.
rus = RandomUnderSampler(random_state=42)
X_res, y_res = rus.fit_resample(X_train, y_train)
```

Combined Approaches

A combination of oversampling the minority class and undersampling the majority class can sometimes yield the best results by balancing the dataset without losing significant information.
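One way to picture a combined approach is the NumPy sketch below, which uses plain random resampling in both directions rather than SMOTE. The helper `resample_to_size`, the target of 300 rows per class, and the synthetic data are assumptions for illustration. The imbalanced-learn library also ships ready-made combinations such as `SMOTEENN` and `SMOTETomek` in `imblearn.combine`.

```python
import numpy as np

def resample_to_size(X, y, n_per_class, seed=0):
    """Randomly over- or undersample each class to exactly n_per_class rows."""
    rng = np.random.default_rng(seed)
    chosen = []
    for label in np.unique(y):
        idx = np.flatnonzero(y == label)
        # Sample with replacement only when the class is too small (oversampling);
        # otherwise draw without replacement (undersampling).
        replace = len(idx) < n_per_class
        chosen.append(rng.choice(idx, size=n_per_class, replace=replace))
    chosen = rng.permutation(np.concatenate(chosen))
    return X[chosen], y[chosen]

# Hypothetical data: 900 majority rows and 100 minority rows.
rng = np.random.default_rng(42)
X = rng.normal(size=(1000, 3))
y = np.array([0] * 900 + [1] * 100)

# Meet in the middle: shrink the majority to 300 rows and grow the minority to 300.
X_bal, y_bal = resample_to_size(X, y, n_per_class=300)
print(np.bincount(y_bal))  # [300 300]
```

Meeting in the middle discards fewer majority rows than pure undersampling and duplicates fewer minority rows than pure oversampling. As with any resampling, it should be applied to the training split only.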

Algorithmic Approaches to Handle Imbalance

Cost-sensitive Learning

Adjusting the cost function so that misclassifying a minority-class instance is penalized more heavily encourages the model to pay more attention to that class. Many scikit-learn estimators accept a class_weight parameter for this purpose.
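As a minimal sketch of cost-sensitive learning with scikit-learn, the example below inspects the weights that `class_weight="balanced"` implies and then passes the same setting to a classifier. The synthetic 90/10 dataset and the choice of `LogisticRegression` are assumptions for illustration.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.utils.class_weight import compute_class_weight

# Synthetic, roughly 90/10 imbalanced data (illustrative only).
X, y = make_classification(n_samples=1000, n_features=10, weights=[0.9, 0.1], random_state=42)

# "balanced" weights are n_samples / (n_classes * number_of_samples_in_class).
classes = np.unique(y)
weights = compute_class_weight(class_weight="balanced", classes=classes, y=y)
print(dict(zip(classes, weights)))

# The same weighting applied inside the estimator's loss function.
clf = LogisticRegression(class_weight="balanced", max_iter=1000)
clf.fit(X, y)
```

The rarer class receives the larger weight, so each minority mistake costs more during training. A dictionary such as `{0: 1, 1: 10}` can be passed instead when the relative costs are known from the application.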

Ensemble Methods

Ensemble methods like Random Forests and boosting algorithms (e.g., XGBoost) can incorporate the class imbalance directly into their learning process. For example, adjusting the scale_pos_weight parameter in XGBoost can help in compensating for the imbalanced data:

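```python
from xgboost import XGBClassifier

# Assumes binary labels where 1 is the minority (positive) class.
n_negative = (y_train == 0).sum()
n_positive = (y_train == 1).sum()

# Weight positives by the negative-to-positive ratio of the training set.
model = XGBClassifier(scale_pos_weight=n_negative / n_positive)

# Fit on the original training split: the weight already compensates for the
# imbalance, so fitting on the resampled X_res, y_res would correct for it twice.
model.fit(X_train, y_train)
```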

Advanced Techniques

Anomaly Detection

In cases of extreme imbalance, treating the problem as anomaly detection (where the minority class is treated as an anomaly) can be more effective.
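A minimal sketch of this framing uses scikit-learn's `IsolationForest`, which is one of several possible detectors. The two-cluster synthetic data and the 1% contamination value are assumptions chosen for illustration. Note that the detector is unsupervised: it never sees the class labels.

```python
import numpy as np
from sklearn.ensemble import IsolationForest

rng = np.random.default_rng(42)

# Hypothetical data: 990 "normal" points near the origin, 10 rare points far away.
X_normal = rng.normal(loc=0.0, scale=1.0, size=(990, 2))
X_rare = rng.normal(loc=6.0, scale=0.5, size=(10, 2))
X = np.vstack([X_normal, X_rare])

# contamination is the assumed share of anomalies; here it is set to the known 1%.
detector = IsolationForest(contamination=0.01, random_state=42)
labels = detector.fit_predict(X)   # +1 = inlier, -1 = flagged as anomaly

n_flagged = int((labels == -1).sum())
n_rare_flagged = int((labels[-10:] == -1).sum())
print(f"flagged {n_flagged} points, {n_rare_flagged} of them from the rare cluster")
```

In a real problem the minority share is rarely known exactly, so `contamination` becomes a tuning choice, and the flagged points should still be scored against held-out labels with precision and recall.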

Data Augmentation

For image or text data, creating additional synthetic data for the minority class can help balance the dataset. Techniques include image rotation/flipping for images or synonym replacement for text.
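For images, the flips and rotations mentioned above can be sketched with plain NumPy. The helper `augment_image` and the tiny 4x4 array are hypothetical stand-ins. Real pipelines usually rely on a dedicated augmentation library.

```python
import numpy as np

def augment_image(image):
    """Return simple geometric variants of one image array of shape (H, W) or (H, W, C)."""
    return [
        np.fliplr(image),        # horizontal flip
        np.flipud(image),        # vertical flip
        np.rot90(image, k=1),    # 90 degree rotation (counter-clockwise)
        np.rot90(image, k=2),    # 180 degree rotation
    ]

# Hypothetical 4x4 grayscale "image" standing in for a minority-class sample.
image = np.arange(16).reshape(4, 4)
variants = augment_image(image)
print(len(variants), variants[0].shape)  # 4 (4, 4)
```

These transforms are only safe when they preserve the label. A flipped handwritten digit, for example, may no longer be a valid member of its class.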

Transfer Learning

Fine-tuning a pre-trained model on your imbalanced dataset lets you reuse the knowledge the model has already acquired from a larger and more comprehensive dataset, which can help when minority-class examples are scarce.

Best Practices and Considerations

Choosing the Right Metric

It’s crucial to choose metrics that accurately reflect the performance of your model in the context of an imbalanced dataset, such as the F1-Score or AUC-ROC, rather than defaulting to accuracy.
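The sketch below computes these metrics with scikit-learn on a synthetic 95/5 dataset. The data, the choice of `LogisticRegression`, and the variable names are assumptions for illustration. It also reports average precision (the area under the precision-recall curve), which is often more informative than AUC-ROC when positives are very rare.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import (average_precision_score, classification_report,
                             confusion_matrix, f1_score, roc_auc_score)
from sklearn.model_selection import train_test_split

# Synthetic, roughly 95/5 imbalanced data (illustrative only).
X, y = make_classification(n_samples=2000, n_features=10, weights=[0.95, 0.05], random_state=42)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=42)

clf = LogisticRegression(max_iter=1000).fit(X_train, y_train)
y_pred = clf.predict(X_test)               # hard labels for F1 and the confusion matrix
y_score = clf.predict_proba(X_test)[:, 1]  # positive-class probabilities for the AUC metrics

cm = confusion_matrix(y_test, y_pred)
print(cm)
print(classification_report(y_test, y_pred, zero_division=0))

f1 = f1_score(y_test, y_pred, zero_division=0)
roc_auc = roc_auc_score(y_test, y_score)
pr_auc = average_precision_score(y_test, y_score)
print(f"F1 = {f1:.3f}, ROC AUC = {roc_auc:.3f}, PR AUC = {pr_auc:.3f}")
```

F1 depends on the decision threshold (0.5 by default here), while the two AUC scores summarize ranking quality across all thresholds, so it is worth reporting both kinds.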

Cross-validation Strategies

Employing stratified K-fold cross-validation ensures each fold contains a representative ratio of each class, preserving the imbalance but ensuring it’s consistently represented:

```python
from sklearn.model_selection import StratifiedKFold

# X and y are assumed to be NumPy arrays holding your features and target;
# for pandas objects, index with .iloc[train_index] instead.
skf = StratifiedKFold(n_splits=5)

for train_index, test_index in skf.split(X, y):
    X_train, X_test = X[train_index], X[test_index]
    y_train, y_test = y[train_index], y[test_index]
    # Model training and evaluation here
```
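When cross-validation is combined with resampling, the resampling belongs inside the loop so that each test fold keeps its natural class ratio. The sketch below shows one way to do this with simple random oversampling. The helper `oversample_minority`, the synthetic data, and the classifier are assumptions for illustration. With imbalanced-learn, placing a sampler in an `imblearn.pipeline.Pipeline` achieves the same effect, because samplers run only during fitting.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import f1_score
from sklearn.model_selection import StratifiedKFold

def oversample_minority(X, y, rng):
    """Randomly duplicate minority rows until both classes have equal counts (binary case)."""
    counts = np.bincount(y)
    minority = int(np.argmin(counts))
    extra = rng.choice(np.flatnonzero(y == minority),
                       size=counts.max() - counts.min(), replace=True)
    idx = np.concatenate([np.arange(len(y)), extra])
    return X[idx], y[idx]

# Synthetic, roughly 90/10 imbalanced data (illustrative only).
X, y = make_classification(n_samples=1000, n_features=10, weights=[0.9, 0.1], random_state=42)
rng = np.random.default_rng(42)
skf = StratifiedKFold(n_splits=5, shuffle=True, random_state=42)

scores = []
for train_index, test_index in skf.split(X, y):
    # Resample the training fold only; the test fold keeps its natural imbalance.
    X_train, y_train = oversample_minority(X[train_index], y[train_index], rng)
    model = LogisticRegression(max_iter=1000).fit(X_train, y_train)
    y_pred = model.predict(X[test_index])
    scores.append(f1_score(y[test_index], y_pred, zero_division=0))

print(f"mean F1 over {len(scores)} folds: {np.mean(scores):.3f}")
```

Resampling before splitting would let copies of the same minority row land in both the training and test folds, which makes the scores look better than they are.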

Conclusion

Machine learning is an ever-evolving field, and new methods and best practices for imbalanced data continue to emerge. The strategies outlined here lay a solid foundation, but the exploration shouldn’t stop with them. Engaging with the broader community, keeping up with the latest research, and persistently challenging your assumptions and models are essential for anyone looking to master the handling of imbalanced datasets.

In closing, the challenge of imbalanced datasets in classification problems is both a technical hurdle and an opportunity for innovation. By embracing the complexity of this issue, applying the techniques discussed, and continually seeking out new knowledge and approaches, you can significantly enhance the fairness, accuracy, and robustness of your machine learning models. This not only advances your own work but contributes to the broader goal of creating technology that is just, equitable, and reflective of the diverse world it serves.
