A decision tree is a popular supervised machine learning algorithm that can be used for both classification and regression tasks. It uses a flowchart-like structure consisting of a root node, decision nodes, leaf nodes and branches, which gives a clear visualization of the decision-making process.

The decision tree is a fundamental algorithm to master at the start of a machine learning journey. In this blog we explore in detail everything we need to know about the decision tree algorithm.

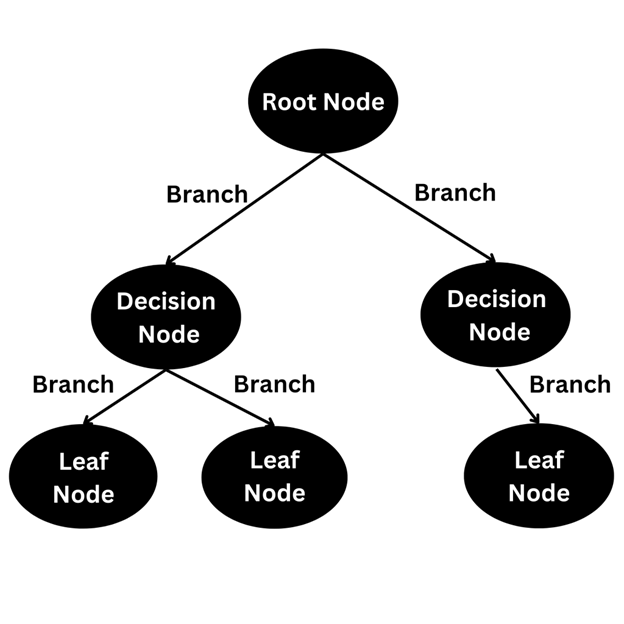

Components Of A Decision Tree

The biggest selling point of a decision tree is that its visualization is as easy to read as a flowchart. It is made up of the following components:

Root Node

This node sits at the very top, represents the entire dataset, and is where the first split branches out.

Decision Node

These are internal nodes where the dataset gets further divided based on conditions. These have arrows coming into and heading out from them.

Leaf Node

These sit at the very bottom and have no more nodes attached to them. In a decision tree, a leaf node represents a final outcome or decision. They have no arrows heading out from them.

Branches

These are arrows connecting different nodes based on decisions.

How Does a Decision Tree Work?

Let us now understand, in a concise manner, how a decision tree in machine learning works.

Choosing the root node

The first step is looking at the dataset and identifying the feature that maximizes information gain or minimizes impurity. This is done using measures such as the Gini Index or Entropy. Once identified, the feature becomes the root node.

Recursive Splitting

We repeat the process on each resulting subset, using the same measures to pick the best feature and split the data further into decision nodes. This process repeats until a stopping criterion is met.

Stopping Criteria

The recursive splitting stops when any of the following holds:

A node contains data points from a single class (pure node).

A maximum tree depth is reached.

The subset is too small to justify further splits.
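
The splitting and stopping steps above can be sketched in a few lines of plain Python. This is only an illustrative sketch, not how any particular library implements it: the names `gini`, `best_split` and `build`, the nested-dict tree layout and the `max_depth` / `min_samples` defaults are all assumptions made for this example.

```python
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def best_split(rows, labels):
    """Return the (feature, threshold) pair with the lowest weighted Gini, or None."""
    best, best_score = None, gini(labels)
    for f in range(len(rows[0])):
        for t in sorted({row[f] for row in rows}):
            left = [y for row, y in zip(rows, labels) if row[f] <= t]
            right = [y for row, y in zip(rows, labels) if row[f] > t]
            if not left or not right:
                continue  # a split must send samples to both sides
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
            if score < best_score:
                best, best_score = (f, t), score
    return best


def build(rows, labels, depth=0, max_depth=3, min_samples=2):
    """Recursively grow a tree, returning nested dicts."""
    majority = Counter(labels).most_common(1)[0][0]
    # Stopping criteria: pure node, depth limit reached, or too few samples.
    if len(set(labels)) == 1 or depth >= max_depth or len(labels) < min_samples:
        return {"leaf": majority}
    split = best_split(rows, labels)
    if split is None:  # no split reduces impurity
        return {"leaf": majority}
    f, t = split
    left = [i for i, row in enumerate(rows) if row[f] <= t]
    right = [i for i, row in enumerate(rows) if row[f] > t]
    return {
        "feature": f,
        "threshold": t,
        "left": build([rows[i] for i in left], [labels[i] for i in left],
                      depth + 1, max_depth, min_samples),
        "right": build([rows[i] for i in right], [labels[i] for i in right],
                       depth + 1, max_depth, min_samples),
    }


# Toy data: one numeric feature, two classes.
rows = [[1.0], [2.0], [3.0], [4.0]]
labels = ["a", "a", "b", "b"]
tree = build(rows, labels)
print(tree)
```

On this toy data the root splits at a threshold of 2.0 and both children are pure, so recursion stops after a single split.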

Prediction

Once trained, the algorithm passes the input data through the decision branches until it reaches a leaf node. The value or class of the leaf node is then returned as the prediction.
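
As a rough illustration of that traversal, the sketch below walks a small hand-built tree. The nested-dict layout, the `predict` helper and the two spam features are hypothetical choices made for this example only.

```python
def predict(node, sample):
    """Follow branches from the root until a leaf is reached."""
    while "leaf" not in node:
        go_left = sample[node["feature"]] <= node["threshold"]
        node = node["left"] if go_left else node["right"]
    return node["leaf"]


# Hand-built tree (assumed features):
# feature 0 = occurrences of the word "free", feature 1 = number of links.
tree = {
    "feature": 0, "threshold": 2,
    "left": {"leaf": "not spam"},
    "right": {
        "feature": 1, "threshold": 1,
        "left": {"leaf": "not spam"},
        "right": {"leaf": "spam"},
    },
}

print(predict(tree, [5, 3]))  # many "free" mentions and several links
print(predict(tree, [1, 10]))  # few "free" mentions, so the left branch is taken
```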

Attribute Selection Measures In Decision Tree

One of the most important steps in a decision tree algorithm is attribute selection, that is, deciding which feature to split on at each node. The common measures used to select attributes are:

Gini Index

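The Gini Index measures impurity in a dataset. It is 0 when a node is perfectly pure (all data belongs to one class) and grows as the classes become more mixed, up to a maximum of 1 - 1/k for k equally represented classes (0.5 for two classes), so it approaches but never reaches 1. The algorithm selects the split that minimizes the weighted Gini Index of the resulting subsets, so that each subset is as pure as possible.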
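
For class proportions p_i in a node, the Gini Index is 1 minus the sum of the squared p_i. A minimal sketch of that calculation follows; the helper names `gini` and `weighted_gini` are assumptions for illustration.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 1 - sum(p_i ** 2) over the class proportions p_i."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def weighted_gini(left, right):
    """Impurity of a split: child impurities weighted by child size."""
    n = len(left) + len(right)
    return (len(left) * gini(left) + len(right) * gini(right)) / n


pure = gini(["spam"] * 4)                      # one class only
mixed = gini(["spam", "spam", "ham", "ham"])   # two classes, evenly mixed
good_split = weighted_gini(["spam", "spam"], ["ham", "ham"])
bad_split = weighted_gini(["spam", "ham"], ["spam", "ham"])
print(pure, mixed, good_split, bad_split)
```

The split that separates the classes cleanly scores lower than the one that leaves both children mixed, which is why it would be preferred.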

Information Gain

Entropy measures the randomness or disorder in the data, with lower entropy indicating higher purity. Based on the concept of entropy, Information Gain quantifies the reduction in uncertainty after splitting. A split with higher Information Gain is preferred.
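
A small sketch of both quantities, assuming base-2 logarithms so that entropy is measured in bits. The function names are illustrative, not taken from any library.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy in bits: -sum(p_i * log2(p_i))."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(parent, children):
    """Parent entropy minus the size-weighted entropy of the child subsets."""
    n = len(parent)
    remainder = sum(len(child) / n * entropy(child) for child in children)
    return entropy(parent) - remainder


parent = ["yes", "yes", "no", "no"]
perfect = information_gain(parent, [["yes", "yes"], ["no", "no"]])
useless = information_gain(parent, [["yes", "no"], ["yes", "no"]])
print(perfect, useless)
```

A split that fully separates the classes removes all of the parent's uncertainty, while a split that leaves each child as mixed as the parent gains nothing.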

Gain Ratio

While Information Gain works well, it tends to favor attributes with many unique values. Gain Ratio normalizes Information Gain by the intrinsic information of a split (the entropy of the split sizes themselves), making it more robust for datasets with high-cardinality categorical features.
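
The effect of that normalization can be seen in a short sketch. Here Gain Ratio is taken as Information Gain divided by the split information, the entropy of the child-subset sizes; the helper names and the toy labels are assumptions for this example.

```python
import math
from collections import Counter


def entropy(labels):
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def gain_ratio(parent, children):
    """Information gain divided by the intrinsic (split) information."""
    n = len(parent)
    gain = entropy(parent) - sum(len(c) / n * entropy(c) for c in children)
    split_info = -sum(len(c) / n * math.log2(len(c) / n) for c in children)
    return gain / split_info if split_info > 0 else 0.0


parent = ["yes", "yes", "no", "no"]
# A binary attribute that separates the classes cleanly.
binary = gain_ratio(parent, [["yes", "yes"], ["no", "no"]])
# An ID-like attribute with one unique value per row: same information gain,
# but a larger split information, so a lower gain ratio.
id_like = gain_ratio(parent, [["yes"], ["yes"], ["no"], ["no"]])
print(binary, id_like)
```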

Types Of Decision Trees

There are two primary types of problems to which the decision tree algorithm can be applied:

Classification Decision Tree

Classification trees are used when the target variable is categorical in nature. The simplest case is a Yes or No problem, such as finding out whether an event happened or not, but the same approach works for more than two classes. For example, whether an email is spam or not, or whether a credit card transaction is fraudulent or not.

Regression Decision Tree

Regression trees are used when the target variable is continuous in nature. They are typically used for problems that aim to predict a numeric value based on previous data. For example, predicting the prices of houses based on size, location and age.
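
A minimal regression sketch with Scikit-learn's `DecisionTreeRegressor` follows. The house sizes, ages and prices are made-up toy values, used only to show the shape of the API.

```python
from sklearn.tree import DecisionTreeRegressor

# Hypothetical toy data: [size in square feet, age in years] -> price.
X = [[800, 30], [950, 25], [1200, 15], [1500, 10], [2000, 5], [2400, 2]]
y = [150_000, 170_000, 230_000, 280_000, 390_000, 450_000]

reg = DecisionTreeRegressor(max_depth=2, random_state=0)
reg.fit(X, y)

# With the default squared-error criterion, each leaf predicts the mean
# target of the training samples that fall into it.
predicted = reg.predict([[1300, 12]])[0]
print(f"Predicted price: {predicted:,.0f}")
```

Because every leaf returns an average of training targets, a regression tree cannot predict values outside the range seen during training.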

Pruning Techniques In Decision Tree

The objective of pruning a decision tree is to reduce the complexity of the tree to prevent overfitting. This is done by removing sections of the tree that are non-critical and redundant in the decision making process.

Pruning aims to improve the predictive accuracy of the final model on unseen data. There are two main approaches to pruning:

Pre-pruning (Early Stopping)

Pre-pruning stops the tree-building process early based on specific conditions such as a maximum depth or a minimum information gain. It is much more efficient since it avoids constructing the entire tree. The major drawback of this approach is the horizon effect, where early termination prevents the algorithm from exploring later splits that may be of value.
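
In Scikit-learn, pre-pruning is expressed through constructor parameters. The sketch below compares an unconstrained tree with one limited by `max_depth` and `min_impurity_decrease`; the dataset and the specific values 3 and 0.01 are arbitrary choices for illustration, not recommendations.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

# Grown until every leaf is pure.
full = DecisionTreeClassifier(random_state=0).fit(X_train, y_train)

# Pre-pruned: stop at depth 3 and skip splits that barely reduce impurity.
pre_pruned = DecisionTreeClassifier(
    max_depth=3,
    min_impurity_decrease=0.01,
    random_state=0,
).fit(X_train, y_train)

for name, model in [("full", full), ("pre-pruned", pre_pruned)]:
    print(name, model.get_depth(), model.get_n_leaves(),
          round(model.score(X_test, y_test), 3))
```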

Post-pruning (Simplifying the Tree)

Post-pruning starts with a fully grown tree and simplifies it by removing nodes or subtrees that contribute little to prediction accuracy. This process can reduce the model’s size while improving generalization.

Pruning techniques can be applied using a top-down or bottom-up approach. In the bottom-up approach, the procedure starts at the lowest nodes in the tree and moves upwards, determining the relevance of each node. In the top-down approach, pruning starts at the root node and follows the structure downwards, performing a relevance check at each node.

Reduced error pruning and cost complexity pruning are two key pruning algorithms for decision trees in machine learning.
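
Scikit-learn supports cost complexity pruning through the `ccp_alpha` parameter and the `cost_complexity_pruning_path` method. The sketch below is one possible way to use them: it fits one tree per candidate alpha and keeps the one that scores best on a held-out validation split. The dataset and the selection rule are assumptions made for this example.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)
X_train, X_val, y_train, y_val = train_test_split(X, y, random_state=0)

# Candidate alphas: larger values prune more aggressively.
path = DecisionTreeClassifier(random_state=0).cost_complexity_pruning_path(
    X_train, y_train
)
# Guard against tiny negative values caused by floating-point round-off.
alphas = [max(float(a), 0.0) for a in path.ccp_alphas]

trees = [
    DecisionTreeClassifier(random_state=0, ccp_alpha=a).fit(X_train, y_train)
    for a in alphas
]
leaf_counts = [t.get_n_leaves() for t in trees]
scores = [t.score(X_val, y_val) for t in trees]

best = trees[scores.index(max(scores))]
print("leaves per alpha:", leaf_counts)
print("best alpha:", best.ccp_alpha, "validation accuracy:", max(scores))
```

Choosing alpha with cross-validation instead of a single validation split would be a more robust variant of the same idea.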

Implementing Decision Trees in Python

Python’s Scikit-learn library makes it simple to build and visualize Decision Trees. Let’s explore the process.

Using Scikit-learn for Decision Tree Classification

Scikit-learn provides the DecisionTreeClassifier for classification tasks. The high-level steps involved in this process are:

Step 1: Import the required libraries.

Step 2: Load and preprocess your dataset.

Step 3: Train the Decision Tree model.

Step 4: Evaluate its performance using metrics like accuracy.
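
The four steps can be sketched as follows. The Iris dataset, the 75/25 split and `max_depth=3` are assumptions chosen to keep the example small; substitute your own data and settings.

```python
# Step 1: import the required libraries.
from sklearn.datasets import load_iris
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Step 2: load the dataset and hold out a test set.
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42, stratify=y
)

# Step 3: train the Decision Tree model.
clf = DecisionTreeClassifier(criterion="gini", max_depth=3, random_state=42)
clf.fit(X_train, y_train)

# Step 4: evaluate its performance on unseen data.
accuracy = accuracy_score(y_test, clf.predict(X_test))
print(f"Test accuracy: {accuracy:.2f}")
```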

Data Preparation and Model Training For Decision Tree

Before training a Decision Tree, we need to ensure that our data is:

Cleaned: Handle missing values and outliers.

Encoded: Convert categorical data to numerical values.

Split: Divide the dataset into training and testing subsets.
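
A compact sketch of these three preparation steps is shown below, using a made-up customer table (the `income`, `plan` and `churned` arrays are hypothetical). Median imputation and one-hot encoding are just one reasonable choice for each step.

```python
import numpy as np
from sklearn.impute import SimpleImputer
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import OneHotEncoder
from sklearn.tree import DecisionTreeClassifier

# Hypothetical toy data: one numeric column with gaps, one categorical column.
income = np.array([[42.0], [np.nan], [58.0], [61.0], [35.0], [np.nan], [77.0], [49.0]])
plan = np.array([["basic"], ["pro"], ["pro"], ["basic"], ["free"], ["free"], ["pro"], ["basic"]])
churned = np.array([1, 0, 0, 1, 1, 1, 0, 0])

# Cleaned: fill missing incomes with the column median.
income_clean = SimpleImputer(strategy="median").fit_transform(income)

# Encoded: turn each plan name into its own 0/1 column.
plan_encoded = OneHotEncoder(handle_unknown="ignore").fit_transform(plan).toarray()

X = np.hstack([income_clean, plan_encoded])

# Split: keep a quarter of the rows for testing.
X_train, X_test, y_train, y_test = train_test_split(
    X, churned, test_size=0.25, random_state=0
)

model = DecisionTreeClassifier(random_state=0).fit(X_train, y_train)
print(X.shape, model.predict(X_test))
```

For brevity the imputer and encoder are fitted on all rows here. In a real project it is safer to fit them on the training subset only, for example inside a Scikit-learn `Pipeline`, so that no information from the test set leaks into training.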

Visualizing Decision Trees

Scikit-learn offers methods like plot_tree to visualize the Decision Tree structure, helping in interpretation and debugging.
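
As a dependency-light illustration, the sketch below prints the learned rules as text with `export_text`, a companion to `plot_tree` in `sklearn.tree`. The Iris dataset and `max_depth=2` are assumptions made to keep the output short.

```python
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier, export_text

iris = load_iris()
clf = DecisionTreeClassifier(max_depth=2, random_state=0)
clf.fit(iris.data, iris.target)

# Text rendering of the tree: one line per decision node or leaf.
rules = export_text(clf, feature_names=list(iris.feature_names))
print(rules)
```

With matplotlib installed, calling `plot_tree(clf, feature_names=iris.feature_names, filled=True)` on the same fitted model draws the equivalent diagram.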

Advantages and Disadvantages of Decision Trees

Let us now understand the advantages of decision trees and also the issues in decision tree algorithms.

Pros of Using Decision Trees

Interpretability: Easy to understand and explain.

Versatility: Can handle both categorical and numerical data.

No Scaling Required: Doesn’t require feature scaling or normalization.

Feature Selection: Automatically selects the most important features.

Cons and Limitations

Overfitting: Prone to overfitting on noisy data without pruning.

Instability: Sensitive to small changes in data, which can lead to very different tree structures (high variance).

Computational Cost: Can become computationally expensive for large datasets.

When to Use Decision Trees

Decision Trees are versatile and can be applied to both classification and regression problems. Let us explore some key situations where decision trees stand out.

When Interpretability is Crucial

Decision Trees offer a clear, human-readable structure that makes them ideal for applications where explainability is essential, for example credit scoring or answering yes/no problems.

For Small to Medium-Sized Datasets

Decision Trees work efficiently on smaller datasets without requiring extensive computational resources. On larger datasets, trees may become complex and harder to interpret unless used within ensemble methods.

Working with Mixed Data Types

Decision Trees can handle both numerical and categorical features without requiring extensive preprocessing.

Some good examples are customer segmentation (numerical income and categorical preferences) and risk assessment models.

For Non-linear Relationships

Decision Trees can capture complex, non-linear relationships between features and target variables, making them a good choice for datasets with such patterns.

Quick Prototyping and Baseline Models

Decision Trees are fast to train and implement, making them excellent for quick prototyping or establishing a baseline model. They also suit the initial exploration of new datasets to gain insights into patterns and feature importance.

Applications in Rule-Based Systems

In applications where decision-making must follow explicit, rule-based logic, Decision Trees provide a natural fit. Fraud detection systems, loan approval workflows, or recommendation systems are some good examples.

Advanced Concepts and Optimizations In Decision Tree

Now that we understand the basics of decision trees, let us explore some advanced concepts and optimization techniques.

Ensemble Methods

Ensemble techniques improve the performance of Decision Trees by combining multiple trees. It’s like having multiple experts work together to provide a better final answer. Let us understand Random Forests and Boosting, two examples of ensemble methods built on decision trees.

Random Forests

Random Forests work by constructing a collection (or “forest”) of Decision Trees during training and aggregating their predictions for better accuracy and stability.

How Do Random Forests Work?

- Bootstrap Aggregation (Bagging): Each tree in the Random Forest is trained on a different random subset of the training data, drawn with replacement (bootstrap sampling). This introduces diversity among the trees.

- Feature Randomness: At each split, the algorithm considers a random subset of features instead of evaluating all features. This further reduces correlation between trees.

- Prediction: For classification tasks, Random Forests use majority voting across trees. For regression, they average the predictions.
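
These three ingredients map onto parameters of Scikit-learn's `RandomForestClassifier`, as in the sketch below. The dataset and the choice of 100 trees are assumptions for illustration.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, random_state=0, stratify=y
)

forest = RandomForestClassifier(
    n_estimators=100,      # number of trees in the forest
    bootstrap=True,        # bagging: each tree sees a bootstrap sample
    max_features="sqrt",   # feature randomness at every split
    random_state=0,
)
forest.fit(X_train, y_train)

forest_accuracy = forest.score(X_test, y_test)
print(f"Trees: {len(forest.estimators_)}, test accuracy: {forest_accuracy:.2f}")
```

One implementation detail worth knowing: Scikit-learn's forest averages the class probabilities predicted by the trees rather than counting hard votes, which usually gives the same answer as majority voting.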

Advantages Of Random Forests:

Reduced Overfitting: By averaging predictions from multiple trees, Random Forests lower the variance, making them less prone to overfitting than individual Decision Trees.

Feature Importance: They provide insights into feature significance, making them valuable for exploratory data analysis.

Boosting

Boosting is a sequential technique that builds an ensemble of Decision Trees in which each tree aims to correct the mistakes of its predecessor. Boosting focuses mainly on reducing bias rather than variance.

How Does Boosting Work?

- Weak Learners: Typically starts with shallow Decision Trees (weak learners) that individually have limited predictive power.

- Weighted Learning: The algorithm assigns weights to training samples, emphasizing the ones misclassified by previous trees.

- Sequential Improvement: Each subsequent tree is trained to minimize the error of the ensemble. The process continues until a predefined stopping criterion is met (e.g., number of trees or error threshold).

- Prediction: Predictions are combined in a weighted manner to create a strong final model.
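
A short sketch of this weighted, sequential scheme using Scikit-learn's `AdaBoostClassifier`, whose default weak learner is a depth-1 Decision Tree. The dataset and the 50 boosting rounds are arbitrary choices for this example.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import AdaBoostClassifier
from sklearn.model_selection import train_test_split

X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

# Each round fits a shallow tree on re-weighted training samples.
booster = AdaBoostClassifier(n_estimators=50, random_state=0)
booster.fit(X_train, y_train)

# Test accuracy after 1, 2, ..., n boosting rounds.
staged = list(booster.staged_score(X_test, y_test))
print(f"after 1 tree: {staged[0]:.3f}, after {len(staged)} trees: {staged[-1]:.3f}")
```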

Popular Boosting Algorithms

AdaBoost: Adds weight to misclassified samples and adjusts the final prediction based on the performance of each tree.

Gradient Boosting: Optimizes a loss function by sequentially fitting trees to the negative gradient of the error. This makes it highly flexible and effective for both classification and regression.
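
To make the "fit trees to the negative gradient" idea concrete, here is a hand-rolled sketch for regression with squared error, where the negative gradient is simply the residual. It is a teaching sketch under assumed settings (synthetic sine data, learning rate 0.1, 100 depth-2 trees), not a replacement for Scikit-learn's `GradientBoostingRegressor`.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

# Synthetic data: a noisy sine curve.
rng = np.random.default_rng(0)
X = np.linspace(0, 6, 80).reshape(-1, 1)
y = np.sin(X).ravel() + rng.normal(scale=0.1, size=80)

learning_rate = 0.1
prediction = np.full_like(y, y.mean())       # start from a constant model
initial_mse = np.mean((y - prediction) ** 2)

trees = []
for _ in range(100):
    residuals = y - prediction               # negative gradient of 1/2 * squared error
    tree = DecisionTreeRegressor(max_depth=2, random_state=0).fit(X, residuals)
    prediction += learning_rate * tree.predict(X)   # small step toward the residuals
    trees.append(tree)

final_mse = np.mean((y - prediction) ** 2)
print(f"training MSE: {initial_mse:.4f} -> {final_mse:.4f}")
```

The learning rate shrinks each tree's contribution, so many small corrections add up to the final model instead of a few large ones.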

Advantages Of Boosting

Improved Accuracy: Boosting often outperforms bagging methods like Random Forests on structured datasets.

Customizable Objective: Gradient Boosting allows optimization for various loss functions, making it very versatile.

Handling Overfitting in Decision Trees

Overfitting is a big challenge in decision tree algorithms, as they tend to grow deep and overly complex. This often leads to the model performing poorly on unseen data. One effective way to address this is by applying pruning techniques. Pruning simplifies the tree by removing unwanted parts of the decision tree.

Another way to tackle overfitting is to impose constraints during training. We can limit the tree by setting hyperparameters such as max_depth, min_samples_split, or min_samples_leaf, ensuring it remains manageable and avoids splitting on trivial patterns. These constraints prevent the model from becoming overly specific to the training data while maintaining enough flexibility to capture essential relationships.

Last but not least, improving the dataset itself can also reduce overfitting. Larger and more diverse datasets provide the model with a better representation of underlying patterns, making it harder for the tree to focus on outliers or noise.

Hyperparameter Tuning for Better Performance

Optimizing hyperparameters leads to better models in most cases. Some common hyperparameters include:

max_depth: Limits the depth of the tree.

min_samples_split: Minimum samples required to split a node.

criterion: Selection metric (e.g., Gini Index or Entropy).

There are three common techniques for finding good hyperparameters for decision trees: Grid Search, Randomized Search and Bayesian Optimization.
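
Grid Search is the simplest of these to try. The sketch below uses Scikit-learn's `GridSearchCV` over the three hyperparameters listed above; the candidate values, the dataset and 5-fold cross-validation are assumptions for illustration.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)

# Every combination of these values is tried: 4 * 3 * 2 = 24 candidates.
param_grid = {
    "max_depth": [2, 3, 4, None],
    "min_samples_split": [2, 5, 10],
    "criterion": ["gini", "entropy"],
}

search = GridSearchCV(DecisionTreeClassifier(random_state=0), param_grid, cv=5)
search.fit(X, y)

print("best parameters:", search.best_params_)
print(f"best cross-validated accuracy: {search.best_score_:.3f}")
```

`RandomizedSearchCV` in the same module has a near-identical interface and samples a fixed number of combinations instead of trying them all, which helps when the grid is large. Bayesian optimization is not part of Scikit-learn itself and typically comes from a separate library.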

In conclusion, Decision Trees are powerful, and their visual appeal makes them a valuable tool in any machine learning engineer's repertoire. Their limitations can be addressed through pruning, ensemble methods, and proper hyperparameter tuning. Understanding the components, process, and advanced optimizations ensures we can effectively apply Decision Trees to real-world problems.
