In today’s digital age, organisations generate and process enormous amounts of data every day, from sources such as social media, IoT devices, and transactional systems.

To gain valuable insights from this vast amount of data, organisations are turning to big data analytics platforms such as Apache Spark.

Apache Spark is an open-source distributed computing framework that provides an interface for programming entire clusters with implicit data parallelism and fault tolerance.

It is designed for large-scale data processing and handles large datasets quickly and efficiently. Apache Spark also offers interactive shells for exploring data and running queries.

In this blog, we will focus on optimising big data queries with Apache Spark.

Understanding Query Optimization in Apache Spark

Query optimization is the process of selecting the most efficient query execution plan for a given query. Spark SQL's optimizer, Catalyst, is primarily rule-based: it rewrites a query plan by applying a series of transformation rules. It can also apply cost-based optimization, which uses table and column statistics to estimate the cost of candidate plans, for example when choosing a join strategy or join order. Cost-based optimization is controlled by the spark.sql.cbo.enabled setting and is disabled by default.

The query optimization process in Apache Spark includes the following steps:

Parsing: The input query is parsed into an abstract syntax tree (AST), which Spark represents as an unresolved logical plan. Syntax errors are reported at this stage.

Analysis: The unresolved plan is analysed to resolve references to tables and columns against the catalog and to check that data types are consistent.

Logical Optimization: The optimizer applies logical transformations, such as predicate pushdown, column pruning, and constant folding, to the analysed plan to produce an optimised logical plan.

Physical Planning: The logical plan is converted into a physical plan that specifies how the query will be executed on the cluster, for example which join algorithm to use.

Execution: The physical plan is executed on the cluster to produce the result.
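To make the logical optimization step more concrete, here is a toy sketch in plain Python of a single rewrite rule, constant folding, applied to a tiny expression tree. The classes `Lit`, `Col`, and `Add` and the function `fold_constants` are hypothetical names invented for this illustration. This is not Spark's code; it only shows the general idea of a rule that rewrites a plan into an equivalent, cheaper one.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class Lit:
    """A literal value in the expression tree (hypothetical class)."""
    value: int


@dataclass(frozen=True)
class Col:
    """A reference to a column by name (hypothetical class)."""
    name: str


@dataclass(frozen=True)
class Add:
    """Addition of two sub-expressions (hypothetical class)."""
    left: "Expr"
    right: "Expr"


Expr = Union[Lit, Col, Add]


def fold_constants(expr: Expr) -> Expr:
    """Rewrite rule: replace Add(Lit, Lit) with a single Lit, bottom-up."""
    if isinstance(expr, Add):
        left = fold_constants(expr.left)
        right = fold_constants(expr.right)
        if isinstance(left, Lit) and isinstance(right, Lit):
            return Lit(left.value + right.value)
        return Add(left, right)
    # Literals and column references are already as simple as they can be.
    return expr


# age + (1 + 2) is rewritten to age + 3, so the sum is computed once
# at planning time instead of once per row.
plan = Add(Col("age"), Add(Lit(1), Lit(2)))
optimised = fold_constants(plan)
print(optimised)
```

In Spark itself, we can inspect the plans produced at each stage by calling `explain(True)` on a DataFrame.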

Optimising Big Data Queries with Apache Spark

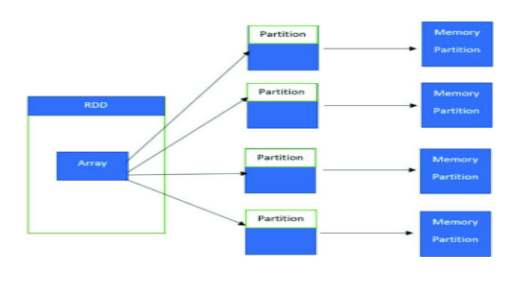

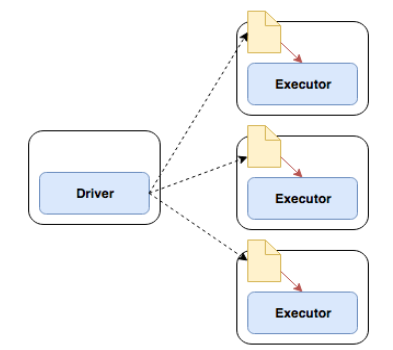

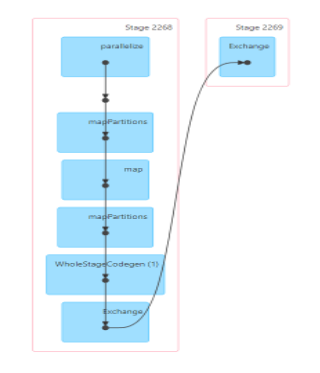

To optimise big data queries with Apache Spark, we need to understand how Spark processes data. Spark uses a distributed computing model where data is partitioned and processed in parallel across a cluster of machines. This parallel processing allows Spark to handle large datasets efficiently.

Here are some tips for optimising big data queries with Apache Spark:

Partitioning

Partitioning data correctly is crucial for query performance in Spark. When Spark processes data, it distributes the data across multiple partitions, and each partition is processed in parallel.

If the data is not partitioned correctly, it can lead to data skew, where some partitions have much more data than others, leading to uneven workload distribution and slower query performance.

In this example, we have a dataset of user logs that we want to process in parallel using Spark. As loaded, the data is split by file blocks rather than by any key, so rows for the same user can be scattered across many partitions.

We can repartition the data by user ID so that all rows for a given user land in the same partition. This spreads the data evenly only as long as no single user accounts for a disproportionate share of the rows.

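from pyspark.sql import SparkSession

from pyspark.sql.functions import col

# Reuse the active session (already available as `spark` in the PySpark shell)

spark = SparkSession.builder.getOrCreate()

# Load data

logs = spark.read.csv("user_logs.csv", header=True, inferSchema=True)

# Partition data by user ID

partitioned_logs = logs.repartition(col("user_id"))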
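To see why the choice of partitioning key matters, here is a small plain-Python model of key-based partitioning. It is a sketch under a simplifying assumption: rows are assigned to partitions by integer user ID modulo the partition count, whereas Spark applies its own hash function to the key. The function `partition_sizes` and the sample data are hypothetical.

```python
from collections import Counter


def partition_sizes(keys, num_partitions):
    """Count rows per partition when each row goes to key % num_partitions."""
    counts = Counter(key % num_partitions for key in keys)
    return [counts.get(p, 0) for p in range(num_partitions)]


# 1,000 users with one log row each: the keys spread evenly.
even_keys = list(range(1000))

# Same total row count, but user 7 produced 700 of the 1,000 rows.
skewed_keys = [7] * 700 + list(range(300))

even_sizes = partition_sizes(even_keys, 4)
skewed_sizes = partition_sizes(skewed_keys, 4)

print(even_sizes)    # [250, 250, 250, 250]
print(skewed_sizes)  # [75, 75, 75, 775]
```

In the skewed case one partition holds more than ten times as many rows as each of the others, so the task that processes it finishes long after the rest. Partitioning by a key helps only when the key's values are reasonably evenly distributed.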

Caching

Caching is a technique where Spark keeps a dataset in memory, spilling to disk if it does not fit, for faster access. Caching is useful for data that is accessed frequently or used several times in a job, because Spark can avoid re-reading and recomputing the same data. Caching is lazy: the data is stored the first time an action computes it, so it pays off only when the same dataset is used more than once.

In this example, we want to calculate the average age of users in a large dataset. We cache the data so that later queries on the same dataset do not re-read it from disk.

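from pyspark.sql import SparkSession

from pyspark.sql.functions import avg, col

# Reuse the active session (already available as `spark` in the PySpark shell)

spark = SparkSession.builder.getOrCreate()

# Load data

users = spark.read.csv("users.csv", header=True, inferSchema=True)

# Mark the data for caching (lazy: nothing is stored until an action runs)

users.cache()

# Calculate average age

avg_age = users.select(avg(col("age")))

# Run an action; this computes the result and populates the cache

avg_age.show()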
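The benefit of caching shows up only when a dataset is used more than once. The following plain-Python sketch models that behaviour with a hypothetical `LazyDataset` class (not a Spark class) that counts how often the underlying source is read. Like Spark's `cache()`, the `cache()` method here only marks the dataset; the data is stored when the first computation runs.

```python
class LazyDataset:
    """Toy stand-in for a lazily evaluated dataset; not a Spark class."""

    def __init__(self, loader):
        self._loader = loader
        self._use_cache = False
        self._cached = None

    def cache(self):
        # Only marks the dataset for caching; nothing is loaded yet.
        self._use_cache = True
        return self

    def _rows(self):
        if self._use_cache and self._cached is not None:
            return self._cached
        rows = self._loader()  # simulates reading from disk
        if self._use_cache:
            self._cached = rows
        return rows

    def mean(self):
        rows = self._rows()
        return sum(rows) / len(rows)

    def maximum(self):
        return max(self._rows())


reads = {"count": 0}


def load_ages():
    """Hypothetical loader that records every read of the source."""
    reads["count"] += 1
    return [25, 35, 45]


# Without caching, every computation re-reads the source.
uncached = LazyDataset(load_ages)
uncached.mean()
uncached.maximum()
uncached_reads = reads["count"]

# With caching, the source is read once, by the first computation.
reads["count"] = 0
cached = LazyDataset(load_ages).cache()
reads_after_cache_call = reads["count"]
cached.mean()
cached.maximum()
cached_reads = reads["count"]

print(uncached_reads, reads_after_cache_call, cached_reads)  # 2 0 1
```

If a dataset is used only once, caching adds memory pressure without saving any work.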

Broadcast Join

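Joining large tables in Spark can be expensive because it requires shuffling data across the cluster. However, if one of the tables is small enough to fit in memory, Spark can use a broadcast join, where the smaller table is copied to every executor and the larger table is joined in place without being shuffled. Spark chooses a broadcast join automatically when a table's estimated size is below spark.sql.autoBroadcastJoinThreshold (10 MB by default); the broadcast() function requests it explicitly.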

In this example, we want to join a large table of user data with a smaller table of user preferences. Since the user preferences table is small enough to fit in memory, we can use a broadcast join to reduce the amount of data that needs to be shuffled.

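from pyspark.sql import SparkSession

from pyspark.sql.functions import broadcast

# Reuse the active session (already available as `spark` in the PySpark shell)

spark = SparkSession.builder.getOrCreate()

# Load data

users = spark.read.csv("users.csv", header=True, inferSchema=True)

user_prefs = spark.read.csv("user_prefs.csv", header=True, inferSchema=True)

# Broadcast join: ship the small table to every executor

joined_data = users.join(broadcast(user_prefs), on="user_id", how="left")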
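A rough back-of-the-envelope model shows why broadcasting helps only when one side is small. The two functions below are hypothetical and deliberately crude: they count rows moved over the network, ignore row sizes and any implementation detail of Spark, and assume a shuffle join moves every row of both tables.

```python
def shuffle_join_rows_moved(large_rows, small_rows):
    """Rough upper bound: a shuffle join repartitions both sides by the join key."""
    return large_rows + small_rows


def broadcast_join_rows_moved(small_rows, num_executors):
    """A broadcast join copies the small side to every executor; the large side stays put."""
    return small_rows * num_executors


# 100 million users, 10,000 preference rows, 50 executors (made-up numbers).
shuffled = shuffle_join_rows_moved(100_000_000, 10_000)
broadcasted = broadcast_join_rows_moved(10_000, 50)
print(shuffled)     # 100010000
print(broadcasted)  # 500000

# If the "small" table has 5 million rows, broadcasting moves more data
# than shuffling would.
shuffled_big = shuffle_join_rows_moved(100_000_000, 5_000_000)
broadcasted_big = broadcast_join_rows_moved(5_000_000, 50)
print(broadcasted_big > shuffled_big)  # True
```

This is the reasoning behind having a size threshold for broadcast joins: the cost of copying the table grows with the number of executors.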

Filter Pushdown

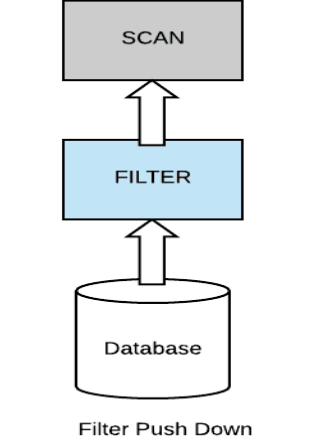

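Filter pushdown is a technique where Spark pushes filter operations down to the data source, so that rows which cannot match are skipped as early as possible. This reduces the amount of data that is read from the source and then moved across the cluster, leading to faster query performance. How much it helps depends on the source: columnar formats such as Parquet and ORC, and JDBC sources, benefit the most, whereas for a plain CSV file Spark still has to scan the whole file.

In this example, we want to filter a large dataset of user logs to only include logs from a specific date range. We apply the filter directly after loading the data, so the optimizer can push it down to the data source where the source supports it.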

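from pyspark.sql import SparkSession

from pyspark.sql.functions import col

# Reuse the active session (already available as `spark` in the PySpark shell)

spark = SparkSession.builder.getOrCreate()

# Load data

logs = spark.read.csv("user_logs.csv", header=True, inferSchema=True)

# Filter on the date range; the optimizer pushes this to the source where supported

filtered_logs = logs.filter((col("date") >= "2022-01-01") & (col("date") <= "2022-01-31"))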
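The effect of pushdown is easiest to see with a source that stores metadata about its data. The plain-Python sketch below models storage chunks that record the minimum and maximum date they contain, similar in spirit to the statistics kept by columnar formats such as Parquet. The `Chunk` class, the `scan` function, and the sample rows are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    """Hypothetical storage chunk with min/max metadata for its date column."""
    min_date: str
    max_date: str
    rows: list  # list of (date, user_id) tuples


def scan(chunks, start, end, pushdown):
    """Return (matching_rows, rows_read) for a date-range filter."""
    rows_read = 0
    matches = []
    for chunk in chunks:
        # With pushdown, the reader skips a chunk using metadata alone.
        if pushdown and (chunk.max_date < start or chunk.min_date > end):
            continue
        rows_read += len(chunk.rows)
        matches.extend(row for row in chunk.rows if start <= row[0] <= end)
    return matches, rows_read


chunks = [
    Chunk("2021-12-01", "2021-12-31", [("2021-12-05", 1), ("2021-12-20", 2)]),
    Chunk("2022-01-01", "2022-01-31",
          [("2022-01-03", 1), ("2022-01-15", 3), ("2022-01-30", 2)]),
    Chunk("2022-02-01", "2022-02-28", [("2022-02-10", 4)]),
]

plain_result, plain_read = scan(chunks, "2022-01-01", "2022-01-31", pushdown=False)
pushed_result, pushed_read = scan(chunks, "2022-01-01", "2022-01-31", pushdown=True)

print(plain_read, pushed_read)        # 6 3
print(plain_result == pushed_result)  # True
```

Both scans return the same rows, but the scan with pushdown reads half as many. In Spark, calling `explain()` on the filtered DataFrame shows which filters were pushed to the source.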

Coalesce and Repartition

Coalesce and repartition are operations that let you control the number of partitions in your data. repartition() performs a full shuffle and can increase or decrease the number of partitions, while coalesce() merges existing partitions without a full shuffle and can only decrease it. Reducing the number of partitions lowers scheduling overhead when there are many small partitions, for example after a selective filter, but too few partitions limits parallelism.

In this example, we want to coalesce a large dataset of user logs to reduce the number of partitions and optimise query performance.

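from pyspark.sql import SparkSession

# Reuse the active session (already available as `spark` in the PySpark shell)

spark = SparkSession.builder.getOrCreate()

# Load data

logs = spark.read.csv("user_logs.csv", header=True, inferSchema=True)

# Coalesce data into at most 4 partitions without a full shuffle

coalesced_logs = logs.coalesce(4)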
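The trade-off between the two operations can be sketched in plain Python. The functions below are toy models, not Spark's implementation: `coalesce` merges neighbouring partitions as they are (an assumption made for simplicity), while `repartition` redistributes every row round-robin, which stands in for a full shuffle.

```python
def coalesce(partitions, n):
    """Merge existing partitions into n groups without splitting any of them."""
    if n >= len(partitions):
        return [list(p) for p in partitions]  # coalesce cannot increase the count
    merged = [[] for _ in range(n)]
    for i, part in enumerate(partitions):
        # Contiguous grouping: neighbouring partitions end up together.
        merged[i * n // len(partitions)].extend(part)
    return merged


def repartition(partitions, n):
    """Redistribute every row round-robin across n new partitions (a full shuffle)."""
    out = [[] for _ in range(n)]
    rows = [row for part in partitions for row in part]
    for i, row in enumerate(rows):
        out[i % n].append(row)
    return out


# Four uneven partitions with 8, 1, 1 and 2 rows.
parts = [list(range(8)), [8], [9], [10, 11]]

coalesced = coalesce(parts, 2)
repartitioned = repartition(parts, 2)

print([len(p) for p in coalesced])      # [9, 3]
print([len(p) for p in repartitioned])  # [6, 6]
```

Coalescing is cheaper because no partition is split, but it carries over any imbalance in the input. Repartitioning moves every row and produces evenly sized partitions.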

Conclusion

Apache Spark provides a powerful platform for processing and analysing large datasets. However, to achieve good query performance, it’s essential to understand how Spark processes data and to use best practices for query optimization.

In this blog, we discussed some tips for optimising big data queries with Apache Spark, including partitioning, caching, broadcast joins, filter pushdown, and coalescing and repartitioning. Used where they fit the data and the workload, these techniques can noticeably improve the performance of your big data queries in Spark.
